
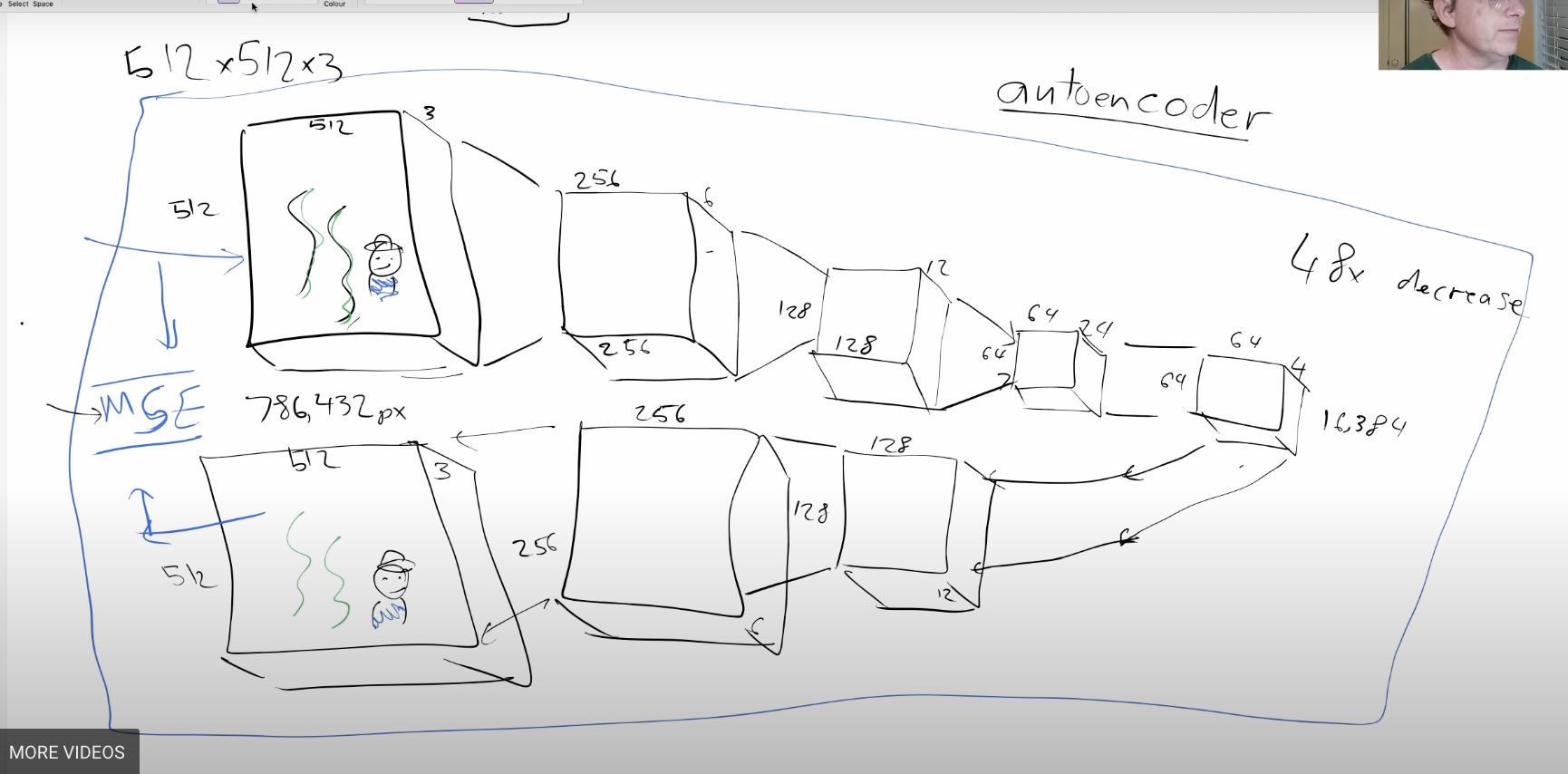

- Building Stable Diffusion from Scratch [Fast.AI Course](https://course.fast.ai/Lessons/part2.html) by @Jeremy Howard - [Lesson 9 - Stable Diffusion](https://course.fast.ai/Lessons/lesson9.html) collapsed:: true - Recorded October 19th, 2022 - {{video https://www.youtube.com/watch?v=_7rMfsA24Ls}} - Chapters collapsed:: true - [0:00](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=0s) - Introduction [6:38](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=398s) - This course vs DALL-E 2 [10:38](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=638s) - How to take full advantage of this course [12:14](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=734s) - Cloud computing options [14:58](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=898s) - Getting started (Github, notebooks to play with, resources) [20:48](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=1248s) - Diffusion notebook from Hugging Face [26:59](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=1619s) - How stable diffusion works [30:06](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=1806s) - Diffusion notebook (guidance scale, negative prompts, init image, textual inversion, Dreambooth) [45:00](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=2700s) - Stable diffusion explained [53:04](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=3184s) - Math notation correction [1:14:37](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=4477s) - Creating a neural network to predict noise in an image [1:27:46](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=5266s) - Working with images and compressing the data with autoencoders [1:40:12](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=6012s) - Explaining latents that will be input into the unet [1:43:54](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=6234s) - Adding text as one hot encoded input to the noise and drawing (aka guidance) [1:47:06](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=6426s) - How to represent numbers vs text embeddings in our model with CLIP encoders 
[1:53:13](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=6793s) - CLIP encoder loss function [2:00:55](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=7255s) - Caveat regarding "time steps" [2:07:04](https://www.youtube.com/watch?v=_7rMfsA24Ls&t=7624s) - Why don’t we do this all in one step? - Timestamped Notes collapsed:: true - Overview lecture. Uses stable_diffusion.ipynb. - The second half of the lesson covers the key concepts involved in Stable Diffusion: collapsed:: true - CLIP embeddings - The VAE (variational autoencoder) - Predicting noise with the unet - Removing noise with schedulers - {{youtube-timestamp 908}} lecture starts here [diffusion notebooks repo](https://github.com/fastai/diffusion-nbs) - {{youtube-timestamp 2307}} fine-tuning example with a Pokémon dataset - {{youtube-timestamp 2489}} textual inversion and Dreambooth - {{youtube-timestamp 2726}} Code and deep dive start here - {{youtube-timestamp 2981}} Take a function that can detect a digit and turn it into a function that can generate the digit! The magic - {{youtube-timestamp 4680}} When we need a black box, we train a neural net!
#nice - {{youtube-timestamp 5166}} unet - first component of stable diffusion collapsed:: true - in: a somewhat noisy image; out: the noise - {{youtube-timestamp 5395}} 512 x 512 RGB image; storing pixel values in a performant way - {{youtube-timestamp 5839}} autoencoder (VAE) collapsed:: true - revisit collapsed:: true - how do we come up with the loss function - from Karpathy - [Concepts discussed](https://course.fast.ai/Lessons/lesson9.html#concepts-discussed) collapsed:: true - Stable Diffusion - Hugging Face’s Diffusers library - Pre-trained pipelines - Guidance scale - Negative prompts - Image-to-image pipelines - Finite differencing - Analytic derivatives - Autoencoders - Textual inversion - Dreambooth - Latents - U-Nets - Text encoders and image encoders - Contrastive loss function - CLIP text encoder - Deep learning optimizers - Perceptual loss - TODO [Lesson 9A video](https://www.youtube.com/watch?v=844LY0vYQhc) (Deep Dive) with [accompanying notebook](https://github.com/fastai/diffusion-nbs/blob/master/Stable%20Diffusion%20Deep%20Dive.ipynb) - TODO [Lesson 9B video](https://youtu.be/mYpjmM7O-30) (The Math of Diffusion) - [Lesson 10 - Diving Deeper](https://course.fast.ai/Lessons/lesson10.html) collapsed:: true - Recorded Oct 19th, 2022 - {{video https://www.youtube.com/watch?v=6StU6UtZEbU}} - [Concepts discussed](https://course.fast.ai/Lessons/lesson10.html#concepts-discussed) collapsed:: true - Papers: - Progressive Distillation for Fast Sampling of Diffusion Models collapsed:: true - On Distillation of Guided Diffusion Models - Imagic - Tokenizing input text - CLIP encoder for embeddings - Scheduler for noise determination - Organizing and simplifying code - Negative prompts and callbacks - Iterators and generators in Python - Custom class for matrices - Dunder methods - Python data model - Tensors - Pseudo-random number generation - Wichmann-Hill algorithm collapsed:: true - Random state in deep learning - Linear classifier using a
tens - Youtube Chapters collapsed:: true - [0:00](https://www.youtube.com/watch?v=6StU6UtZEbU&t=0s) - Introduction [0:35](https://www.youtube.com/watch?v=6StU6UtZEbU&t=35s) - Showing student’s work over the past week. [6:04](https://www.youtube.com/watch?v=6StU6UtZEbU&t=364s) - Recap Lesson 9 [12:55](https://www.youtube.com/watch?v=6StU6UtZEbU&t=775s) - Explaining “Progressive Distillation for Fast Sampling of Diffusion Models” & “On Distillation of Guided Diffusion Models” [26:53](https://www.youtube.com/watch?v=6StU6UtZEbU&t=1613s) - Explaining “Imagic: Text-Based Real Image Editing with Diffusion Models” [33:53](https://www.youtube.com/watch?v=6StU6UtZEbU&t=2033s) - Stable diffusion pipeline code walkthrough [41:19](https://www.youtube.com/watch?v=6StU6UtZEbU&t=2479s) - Scaling random noise to ensure variance [50:21](https://www.youtube.com/watch?v=6StU6UtZEbU&t=3021s) - Recommended homework for the week [53:42](https://www.youtube.com/watch?v=6StU6UtZEbU&t=3222s) - What are the foundations of stable diffusion? Notebook deep dive [1:06:30](https://www.youtube.com/watch?v=6StU6UtZEbU&t=3990s) - Numpy arrays and PyTorch Tensors from scratch [1:28:28](https://www.youtube.com/watch?v=6StU6UtZEbU&t=5308s) - History of tensor programming [1:37:00](https://www.youtube.com/watch?v=6StU6UtZEbU&t=5820s) - Random numbers from scratch [1:42:41](https://www.youtube.com/watch?v=6StU6UtZEbU&t=6161s) - Important tip on random numbers via process forking - My Timestamped Notes collapsed:: true - {{youtube-timestamp 436}} unet - nicer picture - {{youtube-timestamp 1507}} no idea what all this meant.- progressive distillation etc. Ignore the lecture up until now tbh - {{youtube-timestamp 1639}} Imagic paper - text based editing. Really cool - {{youtube-timestamp 2376}} still no idea what's happening in here; when do we start coding? 
:( - {{youtube-timestamp 3366}} ok we start with matrix multiplication here - {{youtube-timestamp 5208}} Good stuff so far; iterator magic to chunk and shape data! Now tensors. The history of tensors (aka arrays) was good learning - {{youtube-timestamp 5857}} Random numbers from scratch start here. My god! - {{youtube-timestamp 6530}} so lame; he just showed PyTorch rand() is > 30x faster; so we won't use our own rand(); then why do it? lol - Lesson Resources collapsed:: true - Awesome tabular notes: [Fashion-MNIST reimplementation](https://mlops.systems/computervision/fastai/parttwo/2022/10/24/foundations-mnist-basics.html) of the lesson, with notes - [Lesson 11 - Matrix multiplication](https://course.fast.ai/Lessons/lesson11.html) collapsed:: true - Recorded Oct 25th, 2022 - {{video https://www.youtube.com/watch?v=_TMhP1VExVQ}} - Concepts Discussed collapsed:: true - Diffusion improvements - Interpolating between prompts for visually appealing transitions collapsed:: true - Improving the update process in text-to-image generation - Decreasing the guidance scale during image generation - Understanding research papers - Matrix multiplication using Python and Numba - Comparing APL with PyTorch - Frobenius norm - Broadcasting in deep learning and machine learning code - Youtube Chapter - [0:00](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=0s) - Introduction [0:20](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=20s) - Showing student’s work [13:03](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=783s) - Workflow on reading an academic paper [16:20](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=980s) - Read DiffEdit paper [26:27](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=1587s) - Understanding the equations in the “Background” section [46:10](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=2770s) - 3 steps of DiffEdit [51:42](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=3102s) - Homework [59:15](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=3555s) - Matrix multiplication from
scratch [1:08:47](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=4127s) - Speed improvement with Numba library [1:19:25](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=4765s) - Frobenius norm [1:25:54](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=5154s) - Broadcasting with scalars and matrices [1:39:22](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=5962s) - Broadcasting rules [1:42:10](https://www.youtube.com/watch?v=Tf-8F5q8Xww&t=6130s) - Matrix multiplication with broadcasting - Timestamp Notes collapsed:: true - {{youtube-timestamp 1051}} Zotero and how to read a paper. Funny, Logseq has an integration. - Ok, installed the app and the Safari extension. - {{youtube-timestamp 1549}} Reading the DiffEdit paper. The field of Tech/Semantic Image Editing - Key to reading a paper - read the abstract, see if it makes sense, read the results, then discard if not interested. The key is to see if you understand what they are trying to achieve; if not, you won't understand the paper. - {{youtube-timestamp 1714}} scary paper bit - the math - {{youtube-timestamp 3129}} crux of the paper - wow; Hmm. What I am calling engineering the research papers just moves them to a lower level of abstraction hmm. - {{youtube-timestamp 3773}} break - {{youtube-timestamp 4460}} back from break - {{youtube-timestamp 4621}} back from break; matrix multiplication starts here. Lol again - it's the second half; but I think the first half was where the good learning and absorption happened.
- {{youtube-timestamp 6057}} APL and matrix stuff - Frobenius norm - {{youtube-timestamp 6649}} Broadcasting is blowing my mind - {{youtube-timestamp 7334}} matmul with broadcasting - Lesson Resources - [Lesson 12 - Mean shift clustering](https://course.fast.ai/Lessons/lesson12.html) collapsed:: true - {{video https://www.youtube.com/watch?v=_xIzPbCgutY&embeds_referring_euri=https%3A%2F%2Fcourse.fast.ai%2F&feature=emb_title}} - [Concepts discussed](https://course.fast.ai/Lessons/lesson13.html#concepts-discussed) collapsed:: true - Basic neural network architecture - Multi-Layer Perceptron (MLP) implementation - Gradients and derivatives - Chain rule and backpropagation - Python debugger (pdb) - PyTorch for calculating derivatives - ReLU and linear function classes - Log sum exp trick - `log_softmax()` function and cross entropy loss - Training loop for a simple neural network - YT Chapters collapsed:: true - [0:00](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=0s) - Introduction [0:15](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=15s) - CLIP Interrogator & how it works [10:52](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=652s) - Matrix multiplication refresher [11:59](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=719s) - Einstein summation [18:34](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=1114s) - Matrix multiplication put on to the GPU [33:31](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=2011s) - Clustering (Meanshift) [37:05](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=2225s) - Create Synthetic Centroids [41:47](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=2507s) -
Mean shift algorithm [47:37](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=2857s) - Plotting gaussian kernels [53:33](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=3213s) - Calculating distances between points [57:42](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=3462s) - Calculating distances between points (illustrated) [1:04:25](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=3865s) - Getting the weights and weighted average of all the points [1:11:53](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=4313s) - Matplotlib animations [1:15:34](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=4534s) - Accelerating our work by putting it on the GPU [1:37:33](https://www.youtube.com/watch?v=_xIzPbCgutY&list=PLfYUBJiXbdtRUvTUYpLdfHHp9a58nWVXP&index=4&t=5853s) - Calculus refresher - Timestamp Notes collapsed:: true - {{youtube-timestamp 503}} clip interrogator and inverse problem - {{youtube-timestamp 645}} back to matrix mul; einsum - wtf, matrix mul is one line of code - {{youtube-timestamp 1136}} GPUs (CUDA). Wow. calculating one cell atomically - {{youtube-timestamp 2016}} phew out of the whole GPU thingy. Now more tensor manipulation muscle training. This is like learning times table hmm when delving into math. Agreed. We will practice it via implementing **Clustering algorithm** - {{youtube-timestamp 3158}} phew fun stuff playing wiht gaussian function and plotting it. 
Now we move to calculating distances and moving points - {{youtube-timestamp 3494}} euclidean distance - {{youtube-timestamp 4220}} wow, writing all the learnings in 4 lines of mean shift algo code - distance, weighted avg, update and repeat - {{youtube-timestamp 4435}} animation fun :) - {{youtube-timestamp 4582}} accelerating this with GPU - {{youtube-timestamp 5245}} holy magic! einsum came to show us matrix multiplication is magic - {{youtube-timestamp 5608}} end of meanshift with batch and gpu; homework and other chatter - {{youtube-timestamp 5802}} Calculus :) #Math/Calculus - Lecture Resources - [Discuss this lesson](https://forums.fast.ai/t/lesson-12-official-topic/101702) - [CLIP Interrogator](https://huggingface.co/spaces/pharma/CLIP-Interrogator) - [Essence of calculus](https://www.youtube.com/watch?v=WUvTyaaNkzM) (3blue1brown) - [Introduction to Derivatives](https://www.mathsisfun.com/calculus/derivatives-introduction.html) - [Intuitively Approaching Einstein Summation Notation](https://forbo7.github.io/forblog/posts/16_einstein_summation_notation.html) by [@ForBo7](https://forums.fast.ai/u/forbo7) - [Implementing and Optimizing Meanshift Clustering](https://forbo7.github.io/forblog/posts/17_meanshift_clustering.html) by [@ForBo7](https://forums.fast.ai/u/forbo7) - Homework - K means clustering - [Lesson 13 - Backpropagation & MLP](https://course.fast.ai/Lessons/lesson13.html) collapsed:: true - {{video https://www.youtube.com/watch?v=vGdB4eI4KBs}} - My Timestamp Notes - {{youtube-timestamp 628}} mind blown in the next 5 minutes as I realized that with a hidden-layer NN we are really just trying to curve the straight line!
- {{youtube-timestamp 1057}} wow; model, multi layer perceptron in a 3-line function for real - {{youtube-timestamp 1662}} derivatives for SGD; interesting new learning - we get a matrix of derivatives - {{youtube-timestamp 2285}} derivative visuals - {{youtube-timestamp 2985}} python debugger pdb to understand backprop - {{youtube-timestamp 3323}} stopping here - {{youtube-timestamp 3621}} ok using forward and backward from pytorch now, the code is clunky to say the least - {{youtube-timestamp 3652}} refactor starts here; ok - {{youtube-timestamp 4647}} wow, the last 5 minutes were like the end scene of the SAW movie; pytorch nn.Module and creating neural nets and forward and backward all just clicked even better on how it's done programmatically! #nice - {{youtube-timestamp 4694}} notebook 04 starts - training the model with mini batches - {{youtube-timestamp 4965}} how to read and write equations in latex - it's apparently easy to learn - {{youtube-timestamp 5621}} many softmax math tricks and simplifications - {{youtube-timestamp 5834}} no idea what's happening here; ok this is negative log likelihood - under the hood; might need some time to grok if it's worth it; for later - {{youtube-timestamp 5923}} Basic training loop - Lesson Resources - [Discuss this lesson](https://forums.fast.ai/t/lesson-13-official-topic/101876) - [The Intuitive Notion of the Chain Rule](https://webspace.ship.edu/msrenault/geogebracalculus/derivative_intuitive_chain_rule.html) - [The Matrix Calculus You Need For Deep Learning](https://explained.ai/matrix-calculus/) - [Part 1 Excel workbooks](https://github.com/fastai/course22/tree/master/xl) - [Calculus help topic](https://forums.fast.ai/t/calculus-help-topic/102020) - [Simple Neural Net Backward Pass](https://nasheqlbrm.github.io/blog/posts/2021-11-13-backward-pass.html) - [Backpropagation Explained using English Words*](https://forbo7.github.io/forblog/posts/18_backprop_from_scratch.html) by [@ForBo7](https://forums.fast.ai/u/forbo7) -
[Lesson 14 - Backpropagation](https://course.fast.ai/Lessons/lesson14.html) collapsed:: true - {{video https://www.youtube.com/watch?v=veqj0DsZSXU}} - Concepts Discussed collapsed:: true - Backpropagation and the chain rule - Refactoring code for efficiency and flexibility - PyTorch’s `nn.Module` and `nn.Sequential` - Creating custom PyTorch modules - Implementing optimizers, DataLoaders, and Datasets - Working with Hugging Face datasets - Using nbdev to create Python modules from Jupyter notebooks - `**kwargs` and delegates - Callbacks and dunder methods in Python’s data model - Building a proper training loop using PyTorch DataLoader - - Youtube Chapters collapsed:: true - [0:00:00](https://www.youtube.com/watch?v=veqj0DsZSXU&t=0s) - Introduction [0:00:30](https://www.youtube.com/watch?v=veqj0DsZSXU&t=30s) - Review of code and math from Lesson 13 [0:07:40](https://www.youtube.com/watch?v=veqj0DsZSXU&t=460s) - f-Strings [0:10:00](https://www.youtube.com/watch?v=veqj0DsZSXU&t=600s) - Re-running the Notebook - Run All Above [0:11:00](https://www.youtube.com/watch?v=veqj0DsZSXU&t=660s) - Starting code refactoring: torch.nn [0:12:48](https://www.youtube.com/watch?v=veqj0DsZSXU&t=768s) - Generator Object [0:13:26](https://www.youtube.com/watch?v=veqj0DsZSXU&t=806s) - Class MLP: Inheriting from nn.Module [0:17:03](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1023s) - Checking the more flexible refactored MLP [0:17:53](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1073s) - Creating our own nn.Module [0:21:38](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1298s) - Using PyTorch’s nn.Module [0:23:51](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1431s) - Using PyTorch’s nn.ModuleList [0:24:59](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1499s) - reduce() [0:26:49](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1609s) - PyThorch’s nn.Sequential [0:27:35](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1655s) - Optimizer 
[0:29:37](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1777s) - PyTorch’ optim and get_model() [0:30:04](https://www.youtube.com/watch?v=veqj0DsZSXU&t=1804s) - Dataset [0:33:29](https://www.youtube.com/watch?v=veqj0DsZSXU&t=2009s) - DataLoader [0:35:53](https://www.youtube.com/watch?v=veqj0DsZSXU&t=2153s) - Random sampling, batch size, collation [0:40:59](https://www.youtube.com/watch?v=veqj0DsZSXU&t=2459s) - What does collate do? [0:45:17](https://www.youtube.com/watch?v=veqj0DsZSXU&t=2717s) - fastcore’s store_attr() [0:46:07](https://www.youtube.com/watch?v=veqj0DsZSXU&t=2767s) - Multiprocessing DataLoader [0:50:36](https://www.youtube.com/watch?v=veqj0DsZSXU&t=3036s) - PyTorch’s Multiprocessing DataLoader [0:53:55](https://www.youtube.com/watch?v=veqj0DsZSXU&t=3235s) - Validation set [0:56:11](https://www.youtube.com/watch?v=veqj0DsZSXU&t=3371s) - Hugging Face Datasets, Fashion-MNIST [1:01:55](https://www.youtube.com/watch?v=veqj0DsZSXU&t=3715s) - collate function [1:04:41](https://www.youtube.com/watch?v=veqj0DsZSXU&t=3881s) - transforms function [1:06:47](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4007s) - decorators [1:09:42](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4182s) - itemgetter [1:11:55](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4315s) - PyTorch’s default_collate [1:15:38](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4538s) - Creating a Python library with nbdev [1:18:53](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4733s) - Plotting images [1:21:14](https://www.youtube.com/watch?v=veqj0DsZSXU&t=4874s) - **kwargs and fastcore’s delegates [1:28:03](https://www.youtube.com/watch?v=veqj0DsZSXU&t=5283s) - Computer Science concepts with Python: callbacks [1:33:40](https://www.youtube.com/watch?v=veqj0DsZSXU&t=5620s) - Lambdas and partials [1:36:26](https://www.youtube.com/watch?v=veqj0DsZSXU&t=5786s) - Callbacks as callable classes [1:37:58](https://www.youtube.com/watch?v=veqj0DsZSXU&t=5878s) - Multiple callback funcs; *args and **kwargs 
[1:43:15](https://www.youtube.com/watch?v=veqj0DsZSXU&t=6195s) - `__dunder__` thingies [1:47:33](https://www.youtube.com/watch?v=veqj0DsZSXU&t=6453s) - Wrap-up - Timestamp Notes collapsed:: true - {{youtube-timestamp 137}} review of code from lesson 13 - {{youtube-timestamp 986}} nice nn.Module overview - {{youtube-timestamp 1105}} nn.Module behind the scenes with `__setattr__` and `__repr__` - {{youtube-timestamp 1530}} on **reduce** - {{youtube-timestamp 1656}} Optimizers - {{youtube-timestamp 1850}} dataset and dataloader, even more simplification! - {{youtube-timestamp 2150}} We got the code nice and concise. Now adding a feature to it: **random sampling.** We do it with a **Sampler** - {{youtube-timestamp 2326}} Batch sampler - {{youtube-timestamp 2965}} multiprocessing started a few minutes ago; fun programming stuff - {{youtube-timestamp 3101}} all those features with Python, plus nifty tricks - {{youtube-timestamp 3390}} ok notebook 05_datasets starts here. We learn to get datasets from Hugging Face. Let's now have some fun. - {{youtube-timestamp 3488}} loading data from an HF dataset.
We get really nice info - {{youtube-timestamp 3827}} magic of the collation function - {{youtube-timestamp 3968}} pytorch can help so we don't need collate, as it knows how to convert a dict into a stack of tensors - {{youtube-timestamp 4104}} @inplace trick - {{youtube-timestamp 4200}} itemgetter - {{youtube-timestamp 4440}} now we get a tuple instead of a dictionary - {{youtube-timestamp 4750}} Plotting - {{youtube-timestamp 4968}} matplotlib subplots - {{youtube-timestamp 5324}} Computer Science and Python foundation stuff - notebook 06_foundations - {{youtube-timestamp 6237}} `__dunder__` special things - Lesson Resources - [The Penultimate Guide to Dunder Methods](https://www.reddit.com/r/learnpython/comments/in1kg7/a_guide_to_pythons_dunder_methods/) - [Lecture 15 - Autoencoder](https://course.fast.ai/Lessons/lesson15.html) collapsed:: true - We start with a dive into convolutional autoencoders and explore the concept of convolutions. Convolutions help neural networks understand the structure of a problem, making it easier to solve. We learn how to apply a convolution to an image using a kernel and discuss techniques like im2col, padding, and stride. We also create a CNN from scratch using a sequential model and train it on the GPU. - We then attempt to build an autoencoder, but face issues with speed and accuracy. To address these issues, we introduce the concept of a `Learner`, which allows for faster experimentation and better understanding of the model’s performance. We create a simple `Learner` and demonstrate its use with a multi-layer perceptron (MLP) model. - Finally, we discuss the importance of understanding Python concepts such as try-except blocks, decorators, getattr, and debugging to reduce cognitive load while learning the framework being built.
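The im2col idea from the lesson summary can be sketched in plain Python. This is only an illustration, not the course notebook's code: the helper names `im2col`, `conv2d_im2col` and `conv2d_loops` are made up for this note, it assumes a single-channel image with no padding and stride 1, and it uses nested lists instead of PyTorch's `F.unfold` so it runs without dependencies. Like deep learning frameworks, it computes a cross-correlation (the kernel is not flipped).

```python
def im2col(img, kh, kw):
    """Unroll every kh x kw patch of a 2-D list into one flat row."""
    out_h = len(img) - kh + 1
    out_w = len(img[0]) - kw + 1
    return [
        [img[i + di][j + dj] for di in range(kh) for dj in range(kw)]
        for i in range(out_h)
        for j in range(out_w)
    ]


def conv2d_im2col(img, kernel):
    """Convolve via a dot product of each unrolled patch with the flat kernel."""
    kh, kw = len(kernel), len(kernel[0])
    flat_kernel = [v for row in kernel for v in row]
    out_w = len(img[0]) - kw + 1
    flat_out = [
        sum(p * k for p, k in zip(patch, flat_kernel))
        for patch in im2col(img, kh, kw)
    ]
    # Fold the flat result back into rows of width out_w.
    return [flat_out[r:r + out_w] for r in range(0, len(flat_out), out_w)]


def conv2d_loops(img, kernel):
    """Reference version: slide the kernel with explicit nested loops."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(img) - kh + 1
    out_w = len(img[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for di in range(kh):
                for dj in range(kw):
                    out[i][j] += img[i + di][j + dj] * kernel[di][dj]
    return out


# Toy 4x4 "image" and a 3x3 kernel that responds to top-to-bottom changes.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
top_edge = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]

result = conv2d_im2col(image, top_edge)
print(result)
```

Each row of `im2col(image, 3, 3)` is one flattened patch, so the whole convolution turns into a single matrix-vector product; with several kernels stacked as columns it becomes one matrix-matrix product, which is why the unfolded form is so much faster on real hardware.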
- {{video https://www.youtube.com/watch?v=0Hi2r4CaHvk&embeds_referring_euri=https%3A%2F%2Fcourse.fast.ai%2F&feature=emb_title}} - Concepts discussed [](https://course.fast.ai/Lessons/lesson15.html#concepts-discussed) collapsed:: true - Convolutional autoencoders - Convolutions and kernels - Im2col technique - Padding and stride in CNNs - Receptive field - Building a CNN from scratch - Creating a Learner for faster experimentation - Python concepts: try-except blocks, decorators, getattr, and debugging - Cognitive load theory in learning - Youtube Chapters collapsed:: true - [0:00:00](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=0s) - Introduction [0:00:51](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=51s) - What are convolutions? [0:06:52](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=412s) - Visualizing convolutions [0:08:51](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=531s) - Creating a convolution with MNIST [0:17:58](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=1078s) - Speeding up the matrix multiplication when calculating convolutions [0:22:27](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=1347s) - Pythorch’s F.unfold and F.conv2d [0:27:21](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=1641s) - Padding and Stride [0:31:03](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=1863s) - Creating the ConvNet [0:38:32](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=2312s) - Convolution Arithmetic. 
NCHW and NHWC [0:39:47](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=2387s) - Parameters in MLP vs CNN [0:42:27](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=2547s) - CNNs and image size [0:43:12](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=2592s) - Receptive fields [0:46:09](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=2769s) - Convolutions in Excel: conv-example.xlsx [0:56:04](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=3364s) - Autoencoders [1:00:00](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=3600s) - Speeding up fitting and improving accuracy [1:05:56](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=3956s) - Reminding what an auto-encoder is [1:15:52](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=4552s) - Creating a Learner [1:22:48](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=4968s) - Metric class [1:28:40](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=5320s) - Decorator with callbacks [1:32:45](https://www.youtube.com/watch?v=0Hi2r4CaHvk&t=5565s) - Python recap - Timestamp Notes - {{youtube-timestamp 72}} what are convolutions - {{youtube-timestamp 290}} pip install -e . - {{youtube-timestamp 354}} convolution intro; basic idea from this [article](https://medium.com/impactai/cnns-from-different-viewpoints-fab7f52d159c) - {{youtube-timestamp 1028}} wow, mind is blown - this convolution is multiplying a matrix with a matrix. Visuals make it awesome - {{youtube-timestamp 1174}} im2col - lol that's awesome; turning this crazy 6-loop multiplication into a matrix multiplication. It's called **unfold** in pytorch - {{youtube-timestamp 1523}} conv2d function to make it easy; much faster with pytorch - {{youtube-timestamp 1604}} batch compute - 16 images, 4 kernels for 26 by 26 images. Grok this and you got machine learning lol - {{youtube-timestamp 1646}} how to avoid losing pixels? with Padding and Stride.
Fucking mind fuck - {{youtube-timestamp 1865}} Creating the CNN - mapping and understanding how this fits with our linear -> relu -> linear model - {{youtube-timestamp 2008}} key to understanding: the output of a conv layer is an image - {{youtube-timestamp 2112}} training code with gpu stuff - {{youtube-timestamp 2309}} conv math - NCHW, batch x channel x height x width; channel-first - {{youtube-timestamp 2679}} excel workbook - wtf, conv in excel, #mindblown collapsed:: true - {{youtube-timestamp 3212}} trace precedents. What in the fucking fuck. @Jeremy Howard is a monster - {{youtube-timestamp 3362}} conv autoencoder starts here - 08_autoencoder nb - {{youtube-timestamp 3623}} warm up training - why is it running slowly? - {{youtube-timestamp 3719}} cpu usage with htop - {{youtube-timestamp 3784}} let's use more than 1 cpu - passing the num_workers argument - {{youtube-timestamp 3906}} paperswithcode.com - find accuracy - {{youtube-timestamp 3985}} whiteboard - understanding deeply. ==I don't grok this :(== - {{youtube-timestamp 4536}} how to not do stuff manually, so we can rapidly try things and understand when things are working and when not. - {{youtube-timestamp 4563}} ==Create a Learner 09_Learner== - {{youtube-timestamp 4771}} Learner class that abstracts away the fit function and the whole bunch of manual iterations - {{youtube-timestamp 4982}} Metric class for metric calculation - {{youtube-timestamp 5217}} Callback Learner. Nice :) - {{youtube-timestamp 5560}} This is like a code walkthrough of the framework. Looks like Jeremy is teaching his fastcore library? Either way it's just good to see why we need certain abstractions and classes - Learner etc. To see the problem etc.
- {{youtube-timestamp 5762}} cognitive load theory #lol yess - Lesson Resources collapsed:: true - Jeremy highly recommends this great intro to CNNs: https://medium.com/impactai/cnns-from-different-viewpoints-fab7f52d159c - [Lecture 16 - The Learner Framework](https://course.fast.ai/Lessons/lesson16.html) collapsed:: true - {{video https://www.youtube.com/watch?v=9YZaYjRKuEc}} - Meta collapsed:: true - Very rich info here and in the last lecture. This is the #engineering around ML. HOW to build and debug model training! This is it. - [Concepts discussed](https://course.fast.ai/Lessons/lesson16.html#concepts-discussed) collapsed:: true - Building a flexible training framework - Basic Callbacks Learner - Callbacks and exceptions (CancelFitException, CancelEpochException, CancelBatchException) - Metrics and MetricsCB callback - torcheval library - DeviceCB callback - Refactoring code with context managers - set_seed function - Analyzing the training process - PyTorch hooks - Histograms of activations - YT Chapters collapsed:: true - [0:00:00](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=0s) - The Learner [0:02:22](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=142s) - Basic Callback Learner [0:07:57](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=477s) - Exceptions [0:08:55](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=535s) - Train with Callbacks [0:12:15](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=735s) - Metrics class: accuracy and loss [0:14:54](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=894s) - Device Callback [0:17:28](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=1048s) - Metrics Callback [0:24:41](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=1481s) - Flexible Learner: @contextmanager [0:31:03](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=1863s) - Flexible Learner: Train Callback [0:32:34](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=1954s) - Flexible Learner: Progress Callback (fastprogress)
[0:37:31](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=2251s) - TrainingLearner subclass, adding momentum [0:43:37](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=2617s) - Learning Rate Finder Callback [0:49:12](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=2952s) - Learning Rate scheduler [0:53:56](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=3236s) - Notebook 10 [0:54:58](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=3298s) - set_seed function [0:55:36](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=3336s) - Fashion-MNIST Baseline [0:57:37](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=3457s) - 1 - Look inside the Model [1:02:50](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=3770s) - PyTorch hooks [1:08:52](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=4132s) - Hooks class / context managers [1:12:17](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=4337s) - Dummy context manager, Dummy list [1:14:45](https://www.youtube.com/watch?v=9YZaYjRKuEc&t=4485s) - Colorful Dimension: histogram - Timestamp notes collapsed:: true - {{youtube-timestamp 36}} Jeremy says he is gonna take this lecture step by step and make it easy to understand. Thanks! - {{youtube-timestamp 128}} Basic Callback Learner - {{youtube-timestamp 661}} I am just not able to pay attention :/ - Gonna skip through the video. I need multiple passes #revisit - {{youtube-timestamp 2304}} Subclassing learner - {{youtube-timestamp 2472}} nice visual and progress - {{youtube-timestamp 2501}} whiteboard - momentum - {{youtube-timestamp 2694}} learning rate finder - Debugging the model - {{youtube-timestamp 3253}} Activations ==How do we identify how our models are training?!
Let's do it== via Hooks (same as callbacks) ==10_activations== - {{youtube-timestamp 3383}} Baseline - we wanna train as fast as possible, i.e. with a high learning rate - {{youtube-timestamp 3645}} when activations are close to 0, that's a disaster - {{youtube-timestamp 3988}} Hook class - {{youtube-timestamp 4512}} Histograms - {{youtube-timestamp 4584}} Whiteboard - What's going on in these pictures? - we see the model is not working; too many activations have gone off the rails - Lesson Resources - [Discuss this lesson](https://forums.fast.ai/t/lesson-16-official-topic/102472) - [Cyclical Learning Rates for Training Neural Networks - Leslie Smith](https://arxiv.org/abs/1506.01186) - [A disciplined approach to neural network hyper-parameters: Part 1 – learning rate, batch size, momentum, and weight decay - Leslie Smith](https://arxiv.org/abs/1803.09820) - [Methods for Automating Learning Rate Finders - Zach Mueller](https://www.novetta.com/2021/03/learning-rate/) - [Lecture 17 - Initialization / Normalization](https://course.fast.ai/Lessons/lesson17.html) collapsed:: true - {{video https://www.youtube.com/watch?v=vGsc_NbU7xc&embeds_referring_euri=https%3A%2F%2Fcourse.fast.ai%2F&feature=emb_title}} - Concepts discussed [](https://course.fast.ai/Lessons/lesson17.html#concepts-discussed) collapsed:: true - Callback class and TrainLearner subclass - HooksCallback and ActivationStats - Glorot (Xavier) initialization - Variance, standard deviation, and covariance - General ReLU activation function - Layer-wise Sequential Unit Variance (LSUV) - Layer Normalization and Batch Normalization - Instance Norm and Group Norm - Accelerated SGD, RMSProp, and Adam optimizers - Experimenting with batch sizes and learning rates - Timestamp Notes - YT Chapter collapsed:: true - [0:00:00](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=0s) - Changes to previous lesson [0:07:50](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=470s) - Trying to get 90% accuracy on Fashion-MNIST 
[0:11:58](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=718s) - Jupyter notebooks and GPU memory [0:14:59](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=899s) - Autoencoder or Classifier [0:16:05](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=965s) - Why do we need a mean of 0 and standard deviation of 1? [0:21:21](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=1281s) - What exactly do we mean by variance? [0:25:56](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=1556s) - Covariance [0:29:33](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=1773s) - Xavier Glorot initialization [0:35:27](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2127s) - ReLU and Kaiming He initialization [0:36:52](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2212s) - Applying an init function [0:38:59](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2339s) - Learning rate finder and MomentumLearner [0:40:10](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2410s) - What’s happening is in each stride-2 convolution? [0:42:32](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2552s) - Normalizing input matrix [0:46:09](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2769s) - 85% accuracy [0:47:30](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2850s) - Using with_transform to modify input data [0:48:18](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=2898s) - ReLU and 0 mean [0:52:06](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=3126s) - Changing the activation function [0:55:09](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=3309s) - 87% accuracy and nice looking training graphs [0:57:16](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=3436s) - “All You Need Is a Good Init”: Layer-wise Sequential Unit Variance [1:03:55](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=3835s) - Batch Normalization, Intro [1:06:39](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=3999s) - Layer Normalization [1:15:47](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=4547s) - Batch Normalization 
[1:23:28](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=5008s) - Batch Norm, Layer Norm, Instance Norm and Group Norm [1:26:11](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=5171s) - Putting all together: Towards 90% [1:28:42](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=5322s) - Accelerated SGD [1:33:32](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=5612s) - Regularization [1:37:37](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=5857s) - Momentum [1:45:32](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=6332s) - Batch size [1:46:37](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=6397s) - RMSProp [1:51:27](https://www.youtube.com/watch?v=vGsc_NbU7xc&t=6687s) - Adam: RMSProp plus Momentum - Timestamp notes collapsed:: true - {{youtube-timestamp 409}} it's all going over my head :/ ; ActivationStats - {{youtube-timestamp 489}} Initialisation starts here; we will try to get to 10% error on Fashion-MNIST - [leaderboard here](https://apps.apple.com/us/story/id1377753262) - {{youtube-timestamp 713}} shows problems as seen in the stats visual; this is also what @Andrej Karpathy showed, and it led to initialization techniques. 0 mean, 1 std deviation - {{youtube-timestamp 917}} interesting - clear notebook memory - {{youtube-timestamp 1014}} Glorot/Xavier init and basic stats - {{youtube-timestamp 1579}} covariance - {{youtube-timestamp 1791}} Xavier init derivation - {{youtube-timestamp 2013}} Xavier doesn't work for us! Kaiming/He init - {{youtube-timestamp 2243}} applying the init with model.apply() - {{youtube-timestamp 2591}} it didn't work - {{youtube-timestamp 2659}} we need to normalize the input as well! 
0 mean and 1 stddev; Batch Transform callback, to transform every batch - {{youtube-timestamp 2824}} MILESTONE - from scratch, 11 notebooks, we have a real convolutional neural network that is properly training - {{youtube-timestamp 3318}} nice looking training graphs - {{youtube-timestamp 3587}} All you need is a good init - LSUV (Layer-wise Sequential Unit Variance) - {{youtube-timestamp 3874}} Batch Normalization - {{youtube-timestamp 5187}} let's put it all together - towards 90% - {{youtube-timestamp 5329}} creating our own SGD class - {{youtube-timestamp 5670}} whiteboard - loss function - adding square of weights - {{youtube-timestamp 6053}} momentum stuff = :/ - {{youtube-timestamp 6084}} whiteboard on momentum being useful - {{youtube-timestamp 6694}} RMSProp + Momentum = Adam - Lesson Resources & Papers collapsed:: true - https://paperswithcode.com/sota/image-classification-on-fashion-mnist - [Discuss this lesson](https://forums.fast.ai/t/lesson-17-official-topic/102602) - [Understanding the difficulty of training deep feedforward neural networks - Xavier Glorot, Yoshua Bengio](http://proceedings.mlr.press/v9/glorot10a) - [Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification - Kaiming He et al](https://arxiv.org/abs/1502.01852) - [LSUV - All you need is a good init - Dmytro Mishkin, Jiri Matas](https://arxiv.org/abs/1511.06422) - [Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift - Sergey Ioffe, Christian Szegedy](https://arxiv.org/abs/1502.03167) - [Layer Normalization - Ba, Kiros, Hinton](https://arxiv.org/abs/1607.06450) - [Lecture 18 - Accelerated SGD & ResNets](https://course.fast.ai/Lessons/lesson18.html) collapsed:: true - {{video https://www.youtube.com/watch?v=nlVOG2Nzc3k}} - YT Chapters collapsed:: true - [0:00:00](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=0s) - Accelerated SGD done in Excel [0:01:35](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=95s) - 
Basic SGD [0:10:56](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=656s) - Momentum [0:15:37](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=937s) - RMSProp [0:16:35](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=995s) - Adam [0:20:11](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=1211s) - Adam with annealing tab [0:23:02](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=1382s) - Learning Rate Annealing in PyTorch [0:26:34](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=1594s) - How PyTorch’s Optimizers work? [0:32:44](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=1964s) - How schedulers work? [0:34:32](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=2072s) - Plotting learning rates from a scheduler [0:36:36](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=2196s) - Creating a scheduler callback [0:40:03](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=2403s) - Training with Cosine Annealing [0:42:18](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=2538s) - 1-Cycle learning rate [0:48:26](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=2906s) - HasLearnCB - passing learn as parameter [0:51:01](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=3061s) - Changes from last week, /compare in GitHub [0:52:40](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=3160s) - fastcore’s patch to the Learner with lr_find [0:55:11](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=3311s) - New fit() parameters [0:56:38](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=3398s) - ResNets [1:17:44](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=4664s) - Training the ResNet [1:21:17](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=4877s) - ResNets from timm [1:23:48](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=5028s) - Going wider [1:26:02](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=5162s) - Pooling [1:31:15](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=5475s) - Reducing the number of parameters and megaFLOPS [1:35:34](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=5734s) - Training for longer 
[1:38:06](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=5886s) - Data Augmentation [1:45:56](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=6356s) - Test Time Augmentation [1:49:22](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=6562s) - Random Erasing [1:55:55](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=6955s) - Random Copying [1:58:52](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=7132s) - Ensembling [2:00:54](https://www.youtube.com/watch?v=nlVOG2Nzc3k&t=7254s) - Wrap-up and homework - Timestamp Notes - {{youtube-timestamp 0}} starts with Excel :) - OMG - this is the most profound lecture, the one I have been waiting for. Machine learning spelled out, all in Excel! - {{youtube-timestamp 405}} row 1 calc ends; brilliant - first iteration of machine learning, and we repeat - {{youtube-timestamp 473}} end of first epoch - {{youtube-timestamp 523}} so what we could do - fire up Tech/Visual Basic lol! to repeat epochs - {{youtube-timestamp 604}} my god; we have machine learning with graphs and visuals - {{youtube-timestamp 662}} momentum to learn things faster - {{youtube-timestamp 1231}} scheduler - it's all optimization, you see, to accelerate the learning hmm - {{youtube-timestamp 1398}} Excel ends - onto the notebook now - BREAK HERE - {{youtube-timestamp 1610}} PyTorch optimizer - TODO [Lecture 19](https://course.fast.ai/Lessons/lesson19.html) - TODO [Lecture 20](https://course.fast.ai/Lessons/lesson20.html) - TODO [Lecture 21](https://course.fast.ai/Lessons/lesson21.html) - TODO [Lecture 22](https://course.fast.ai/Lessons/lesson22.html) - TODO [Lecture 23](https://course.fast.ai/Lessons/lesson23.html) - TODO [Lecture 24](https://course.fast.ai/Lessons/lesson24.html) - [Lecture 25 - Latent Diffusion](https://course.fast.ai/Lessons/lesson25.html) - fun audio generation from spectrogram. #revisit collapsed:: true - {{video https://www.youtube.com/watch?v=8AgZ9jcQ9v8&t=154s}} - Course Wrap Up - SD was not fully done. Stable Diffusion is to be continued in the next part. 
Jeremy will start with the CLIP model. - #revisit collapsed:: true - Struggling with miniai library export :( - Lecture 11 Broadcasting deep dive - Lecture 12 homework - Exporting code from notebooks - Global Resources collapsed:: true - Course Repo - https://github.com/fastai/course22p2 - FastAI C22 Part 1 - https://arxiv.org/abs/1802.01528 - https://lambdalabs.com/blog/how-to-fine-tune-stable-diffusion-how-we-made-the-text-to-pokemon-model-at-lambda - [Fastcore library](https://fastcore.fast.ai) - [series on neural networks](https://www.youtube.com/playlist?list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi) by 3blue1brown; a little too spelled out for me now, but the graphics are great. I need to revisit lec 3 and 4 - [Paperswithcode](https://paperswithcode.com) - https://xl0.github.io/lovely-tensors/ - TODO watch collapsed:: true - {{video https://www.youtube.com/watch?v=XkY2DOUCWMU&list=PLZHQObOWTQDPD3MizzM2xVFitgF8hE_ab&index=4}} - [What is `torch.nn` really?](https://pytorch.org/tutorials/beginner/nn_tutorial.html) - [Excel workbooks](https://github.com/fastai/course22/tree/master/xl)
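
- Scratch sketch for the Lecture 17/18 note "RMSProp + Momentum = Adam" (the momentum part is flagged `:/` above). This is not the course's notebook code, just a minimal pure-Python version on a single weight, roughly in the spirit of the Excel walkthrough. The function name `adam_minimize`, the toy loss `(w - 3)^2`, and the hyperparameter values are my own assumptions for illustration.

```python
import math


def adam_minimize(grad_fn, w, lr=0.1, beta1=0.9, beta2=0.99, eps=1e-8, steps=300):
    """Minimise a one-weight loss given its gradient function (toy Adam sketch)."""
    avg_grad = 0.0  # momentum part: moving average of gradients
    avg_sq = 0.0    # RMSProp part: moving average of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(w)
        avg_grad = beta1 * avg_grad + (1 - beta1) * g
        avg_sq = beta2 * avg_sq + (1 - beta2) * g * g
        # Bias correction: both averages start at 0, so early values are too small.
        unbiased_grad = avg_grad / (1 - beta1 ** t)
        unbiased_sq = avg_sq / (1 - beta2 ** t)
        # Momentum gives the direction; RMSProp scales the step size.
        w -= lr * unbiased_grad / (math.sqrt(unbiased_sq) + eps)
    return w


# Hypothetical toy loss (w - 3)^2, whose gradient is 2 * (w - 3); minimum at w = 3.
w_final = adam_minimize(lambda w: 2 * (w - 3.0), w=0.0)
print(w_final)
```

Setting `beta1=0` leaves only the RMSProp-style scaling. Dropping the division by `sqrt(unbiased_sq)` leaves plain momentum, apart from the bias correction. That is one way to see how the two pieces combine.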